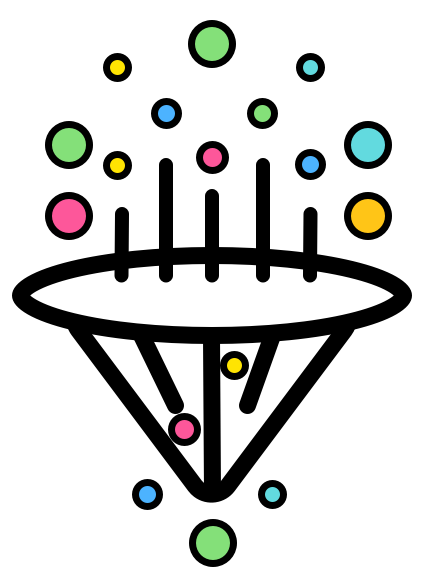

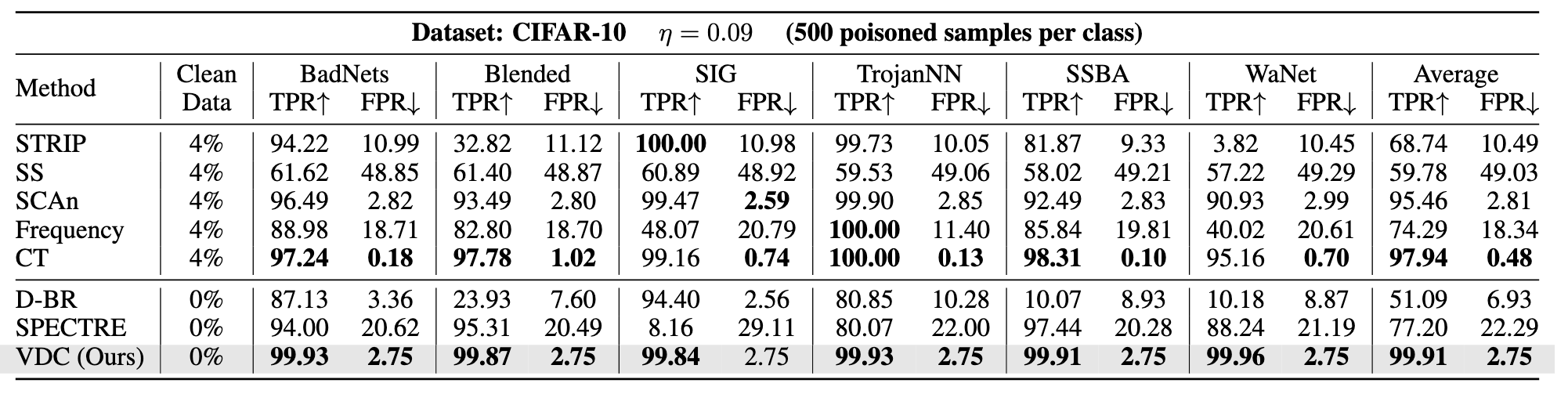

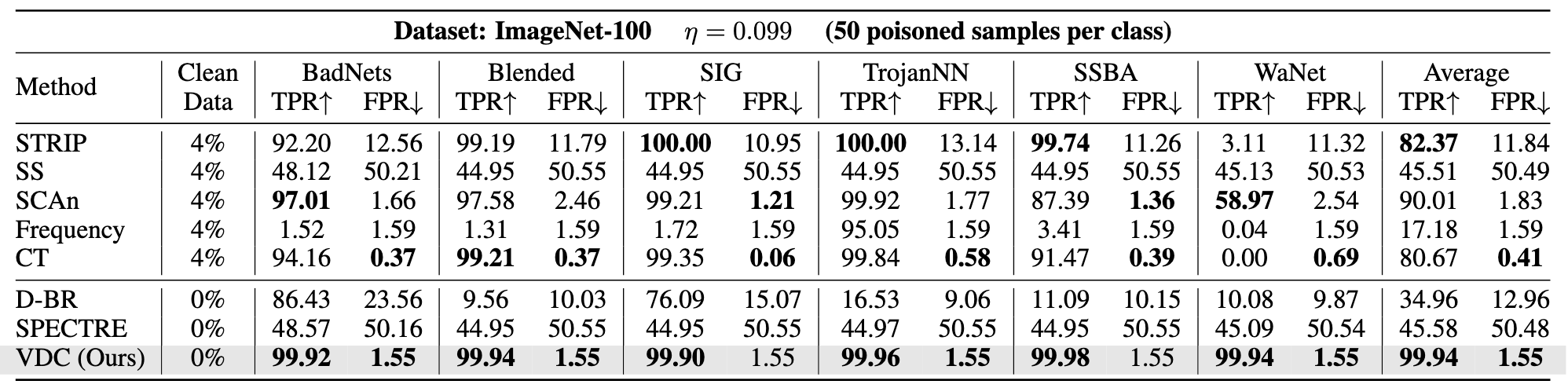

We consider six representative backdoor attacks to generate poisoned samples: (1) visible triggers: BadNets, Blended, and TrojanNN; (2) invisible triggers: SIG, SSBA, and WaNet. For all attacks, we randomly select the same number of images from every class except the target class, add the trigger to them, and change their labels to the target label.
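The poisoning procedure described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `poison_dataset` and `patch_trigger`, the NumPy array layout `(N, H, W, C)`, and the white-square patch (a simple BadNets-style stand-in for the six actual triggers) are all assumptions made for the example.

```python
import numpy as np


def poison_dataset(images, labels, target_label, n_per_class, apply_trigger, seed=0):
    """Poison n_per_class randomly chosen images from every non-target class.

    Returns copies of the images and labels plus a boolean mask that marks
    the poisoned samples (the ground truth for computing TPR and FPR).
    """
    rng = np.random.default_rng(seed)
    images = images.copy()
    labels = labels.copy()

    chosen_per_class = []
    for c in np.unique(labels):
        if c == target_label:
            continue  # the target class itself is never poisoned
        class_idx = np.flatnonzero(labels == c)
        chosen_per_class.append(rng.choice(class_idx, size=n_per_class, replace=False))
    poisoned_idx = np.concatenate(chosen_per_class)

    # Add the trigger, then relabel the triggered images as the target class.
    images[poisoned_idx] = apply_trigger(images[poisoned_idx])
    labels[poisoned_idx] = target_label

    is_poisoned = np.zeros(len(labels), dtype=bool)
    is_poisoned[poisoned_idx] = True
    return images, labels, is_poisoned


def patch_trigger(batch, size=3):
    """Hypothetical visible trigger: a white square in the bottom-right corner."""
    batch = batch.copy()
    batch[:, -size:, -size:, :] = 255
    return batch


# Toy usage: 10 classes with 50 blank images each, 5 poisoned per non-target class.
toy_images = np.zeros((500, 32, 32, 3), dtype=np.uint8)
toy_labels = np.repeat(np.arange(10), 50)
p_images, p_labels, mask = poison_dataset(
    toy_images, toy_labels, target_label=0, n_per_class=5, apply_trigger=patch_trigger
)
eta = mask.mean()  # poisoning rate: poisoned samples / all training samples
print(f"poisoned samples: {mask.sum()}, eta = {eta:.3f}")
```

The toy setup mirrors the CIFAR-10 ratio at a smaller scale: with 500 images per non-target class taken from 9 classes of the 50,000-image CIFAR-10 training set, the same computation gives η = 4500 / 50000 = 0.09.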

Comparison of TPR (%) and FPR (%) for poisoned sample detection on CIFAR-10. η = 0.09, i.e., 500 poisoned samples per non-target class. Average is the mean of the results over the different triggers. The top two results are in bold.

Comparison of TPR (%) and FPR (%) for poisoned sample detection on ImageNet-100. η = 0.099, i.e., 50 poisoned samples per non-target class. Average is the mean of the results over the different triggers. The top two results are in bold.

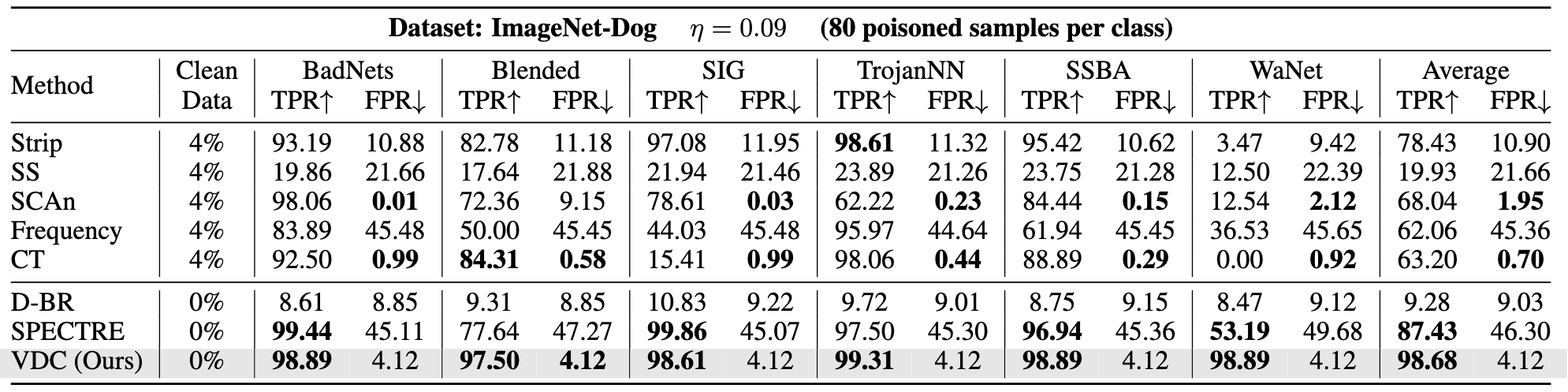

Comparison of TPR (%) and FPR (%) for poisoned sample detection on ImageNet-Dog. η = 0.09, i.e., 80 poisoned samples per non-target class. Average is the mean of the results over the different triggers. The top two results are in bold.